Video games are built around feedback loops: the player observes the field, makes an informed judgement, and acts. The field changes directly and indirectly, based on the player’s actions, and the loop begins again.

For some games, like chess, this loop could be minutes or even days long, depending on how long the other player takes to make a move. But for a large majority of action, rhythm, and shooting games, the success or failure of this loop can come down to a handful of milliseconds. For these types of games, minimising latency, the systematic delay within a system of signals, is crucial.

Let’s nail down a few of the key terms before we tackle resolving latency.

Frame rate is the frequency at which a signal is processed. In games, this is often directly tied to how many times a second the screen is updated (30, 60, or even more in performance-sensitive games). The frame rate is dependent on the display device and the processing speed of the application running.
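To make the relationship between frame rate and time concrete, here is a minimal sketch that converts a frame rate into the duration of one frame and counts how many frames fit into a timing window. The function names are illustrative assumptions, not part of any engine API.

```python
def frame_time_ms(fps: float) -> float:
    """Duration of a single frame in milliseconds at the given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def frames_in_window(window_ms: float, fps: float) -> float:
    """How many frames fit into a timing window of window_ms milliseconds."""
    return window_ms / frame_time_ms(fps)


# Print the per-frame time budget for a few common frame rates.
for fps in (30, 60, 120):
    print(f"{fps} fps -> {frame_time_ms(fps):.1f} ms per frame")
```

Doubling the frame rate halves the time each frame has to be processed and displayed, which is why frame rate and visual latency are so closely linked.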

When we refer to a single frame, that represents one update of the game. This may be a single cycle of visual rendering, or a cycle of game logic processing (incidentally, these two usually work in parallel on modern multi-threaded devices).

Visual latency is directly related to frame rate, and is the time it takes for a frame of graphics to make it onto the screen. Game engine performance and display types have a big impact on this.

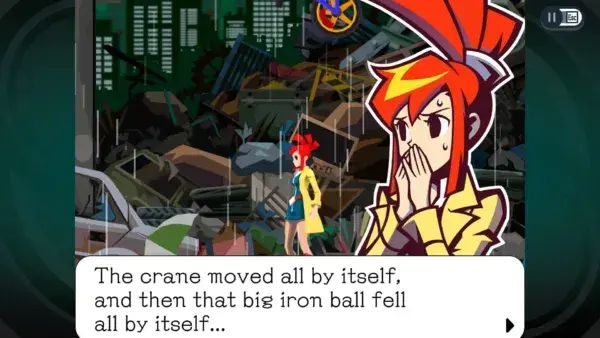

Some of the tapping minigames in Hexagroove require 115ms of precision to pull off – seven frames at 60fps!

Aural latency is the time it takes for audio to reach the player’s ears via headphones or speakers. It may come from a hardware buffer size, audio driver, AV receiver, or even something at the beginning of the chain: the path through various audio effects the application is running (such as delay or reverb). Sound through air itself is slow and can generate up to 3ms of latency per metre from speakers to sofa.
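The figure for sound travelling through air can be checked with a quick calculation. This sketch assumes a speed of sound of roughly 343 metres per second (dry air at about 20°C); the constant and function name are assumptions for illustration.

```python
# Assumed speed of sound in dry air at about 20 degrees Celsius.
SPEED_OF_SOUND_M_PER_S = 343.0


def air_latency_ms(distance_m: float) -> float:
    """Time in milliseconds for sound to travel distance_m metres through air."""
    if distance_m < 0:
        raise ValueError("distance must not be negative")
    return distance_m / SPEED_OF_SOUND_M_PER_S * 1000.0


# Latency added by the air alone for a few speaker-to-sofa distances.
for metres in (1.0, 2.0, 4.0):
    print(f"{metres:.0f} m -> {air_latency_ms(metres):.1f} ms")
```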

Input latency is the time it takes for a signal from the player to be processed. This could be a touchscreen tap or a button press on a controller. It is often a hardware and software kernel-level constraint.

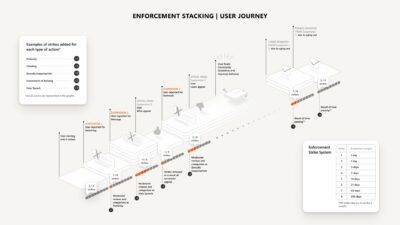

A signal round trip is the path, and all the pieces of latency along the way, that fill out the technical side of the player feedback loop. The most common case is the user pressing a button, the game processing it and changing the graphics and sound in some way (for example, a muzzle flash when the player fires a weapon).

The light of the flash and the sound of the gun must make their way to the screen and speakers, respectively, at what the user perceives as the same time, or the lack of realism causes the brain’s disorientation sensors to kick in.

The key with all of these concepts is that they should be synchronised as much as possible to how humans perceive reality, or the entire experience degrades and feels ‘laggy’.

All the pieces of latency building up in a signal round trip.

DELAYS ALL DOWN THE LINE

Latency is present in both the hardware and software layers of the player experience. Each component of the player feedback loop contributes some, and added together they give the worst-case total latency. In hardware, there is latency in the input device.

In general, wireless input is slower than tethered controllers. Touchscreens can be especially problematic, as each maker has a different approach to buffering and processing the input. The same goes for audio, where both the hardware and the software driver may have large buffers.

These complications have traditionally made developing performant applications for Android much more difficult than iOS. The refresh rate of the display device is also a factor. Beyond the hardware and kernel/OS layers, the game engine may be configured with several frames of buffering to smooth out visual hitches for graphically intense games.
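One way to reason about these layers is as a latency budget, where each stage of the round trip adds its own delay. The sketch below sums two hypothetical paths, one visual and one audio. Every number in it is a made-up placeholder for illustration, not a measurement of any real device.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    """One step in the signal round trip and the delay it adds."""
    name: str
    latency_ms: float


def round_trip_ms(stages) -> float:
    """Total latency of a path: the sum of every stage along the way."""
    return sum(stage.latency_ms for stage in stages)


FRAME_MS = 1000.0 / 60.0  # one frame at 60 fps

# Hypothetical placeholder values, chosen only to illustrate the sum.
visual_path = [
    Stage("wireless controller input", 8.0),
    Stage("game logic (one frame)", FRAME_MS),
    Stage("engine frame queue (two frames)", 2 * FRAME_MS),
    Stage("display processing", 20.0),
]
audio_path = [
    Stage("wireless controller input", 8.0),
    Stage("game logic (one frame)", FRAME_MS),
    Stage("audio driver buffers", 12.0),
    Stage("AV receiver", 30.0),
]

print(f"visual round trip: {round_trip_ms(visual_path):.1f} ms")
print(f"audio round trip:  {round_trip_ms(audio_path):.1f} ms")
```

Laying the budget out like this makes it easier to see which stage dominates, and whether the visual and audio paths differ enough to need compensation.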

Frame rates have been crucial in competition gaming for more than 25 years, whether it’s the original Quake or Quake III Arena.

Total system latency can adversely affect the game experience in a number of ways. Delayed processing of player input can mean the difference between winning and losing a fighting game. In immersive applications like VR, having a low frame rate can induce nausea, otherwise known as ‘VR sickness’, dramatically reducing the appeal for a large number of players.

In rhythm games, when the latency between the graphics, input, and sound is not calibrated properly, players are removed from the experience of the music, and aside from making the game exceedingly difficult, it also leaves the game feeling sloppy, imprecise, and ‘loose’. So now we know the myriad ways latency can ruin an entertainment experience, how can we combat it?

DOWN TO THE METAL

For hardware and OS designers, it’s key that there’s no more buffering than absolutely necessary, and also that threads are given appropriate priority to do their tasks. All modern devices have multiple cores for processing data, so it’s important that one is given top priority, specifically for a task like audio processing, in what’s known as a real-time thread.

The audio thread should be guaranteed to not be interrupted by any other process, and have a fairly consistent interval in which it calls to the application requesting processed audio to send to the output hardware. This kind of priority requires that CPU throttling does not occur, which in turn may often result in poor battery performance for applications that do not need it, so aside from game consoles, few devices support this level of resource dedication.

All animations, game logic, and particle triggers are driven by a musical clock in Hexagroove.

Audio hardware buffer size should also be as small as possible – ideally 256 samples or less – so that changes to the audio in terms of effects and playback happen quickly. Similar requirements exist for input processing of touch-panel devices, though lower latency setups in this area also result in higher-cost components and increased energy consumption.
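The delay added by a hardware buffer follows directly from its size and the sample rate. This is a minimal sketch of that arithmetic; the function name is an assumption, and the sample rates shown are common values rather than anything specific to a particular device.

```python
def buffer_latency_ms(buffer_samples: int, sample_rate_hz: int) -> float:
    """Time in milliseconds needed to play out one buffer of audio."""
    if buffer_samples <= 0 or sample_rate_hz <= 0:
        raise ValueError("buffer size and sample rate must be positive")
    return buffer_samples / sample_rate_hz * 1000.0


# Compare a few buffer sizes at two common sample rates.
for size in (128, 256, 512, 1024):
    at_44k = buffer_latency_ms(size, 44_100)
    at_48k = buffer_latency_ms(size, 48_000)
    print(f"{size:>4} samples: {at_44k:.1f} ms @ 44.1 kHz, {at_48k:.1f} ms @ 48 kHz")
```

A 256-sample buffer at 48kHz works out to a little over 5ms, comfortably inside a single 60fps frame, while a 1,024-sample buffer takes longer than a frame to play out.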

For VR and other graphically immersive experiences, display refresh rates of twice the normal amount (often 90Hz or 120Hz) are critical, so each eye receives its own perspective at a smooth 45–60fps.

As software designers, we have even more opportunities for building a low-latency feedback loop, virtually all of which are independent of concerns like energy consumption. Starting with a low-latency design from the beginning can save a lot of pain later in your game project.

LEAN, MEAN, BUFFER-FREE

At the system level, we can begin with reducing audio-visual buffering to a minimum. Engines like Unity and Unreal have options for the number of frames that are queued up before rendering. You should ideally have a single frame setup where a software renderer thread composes the draw and render state commands on the first frame, and then the GPU processes them immediately, so they appear on the screen a single screen refresh later.

If the game’s running at 60fps, then this means there’s only 16.7ms between the time the game draws the effect and the time the graphics driver presents it for display. Audio buffering should also be kept to a minimum, and any signal processing must take less than the amount of time it takes for a single frame to be drawn, such that a continuous stream of sound can be sent to the speakers at the same time.

Demons’ Score was released for iOS and Android, though the high-performance touchscreen and short audio signal path made the iPhone version feel much crisper.

Unfortunately, display and audio hardware often has a unique amount of latency in each user’s home gaming setup. To account for this, we must offer some sort of latency calibration and compensation that the user may run on a new setup.

This shifts the graphics and audio in time, such that the two outputs are presented simultaneously, regardless of separate latencies in each layer. Unfortunately, some AV systems aren’t built with gaming in mind, so even with latency compensation, there can be substantial lag that the system can’t resolve. This is why handheld systems and pro gaming setups are preferred for reaction-critical games.
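The compensation step can be sketched as follows, assuming the calibration has already produced one measured latency for the video path and one for the audio path. The function name and the example figures are hypothetical; this is one simple way to align the outputs, not a description of any particular game's implementation.

```python
def compensation_delays(video_latency_ms: float, audio_latency_ms: float):
    """Return (video_delay_ms, audio_delay_ms) that make both outputs
    reach the player at the same moment.

    The faster path is held back to match the slower one, so the
    slower path always gets a delay of zero.
    """
    slowest = max(video_latency_ms, audio_latency_ms)
    return slowest - video_latency_ms, slowest - audio_latency_ms


# Hypothetical calibration result: the TV is slower than the headphones.
video_delay, audio_delay = compensation_delays(80.0, 30.0)
print(f"delay video by {video_delay:.0f} ms, audio by {audio_delay:.0f} ms")
```

Note that this only aligns the two outputs with each other. The total delay is still that of the slowest path, which is why compensation alone cannot rescue a setup with a very slow display or receiver.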

ONE CLOCK TO RULE THEM ALL

In addition to engine and game logic level considerations, we can use a number of features and modifications to create a tighter experience. A Nintendo Switch game I developed, Hexagroove: Tactical DJ, is a good example.

It has you playing as a DJ and composing music for a virtual crowd, so it’s important that sound effects are played in time with the music, accentuating beats and transitions that drive the rhythm. So we pre-cue many of the sound effects and transitions in an audio sequencer, which runs in musical time. This sequencer is so important, in fact, that we use it to drive every aspect of the game: animations, game events, player scoring, and sound effects are all driven by a master music-based clock.

This ensures that all the sounds come in exactly when they should, regardless of system latency. We measure the latency of the sound-to-input and screen-to-input trips separately when the game begins.

Tetris Effect for PlayStation VR runs at 60fps to produce its amazing, particle-based visuals.

This is then used to shift the music forward so it stays in sync with the graphics, while the time-sensitive minigame adjusts itself so the visual and musical state are as closely aligned as possible and the player can respond to the tapping events.
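The idea of a master music-based clock can be illustrated with a small sketch. It is not Hexagroove's actual code: the class names, the fixed tempo, and the event list are all assumptions made for the example. Events are cued in beats, and each update asks the clock which of them have come due.

```python
from dataclasses import dataclass, field


@dataclass
class MusicClock:
    """Converts between wall-clock seconds and musical beats at a fixed tempo."""
    bpm: float

    def seconds_per_beat(self) -> float:
        return 60.0 / self.bpm

    def beat_at(self, seconds: float) -> float:
        return seconds / self.seconds_per_beat()

    def time_of(self, beat: float) -> float:
        return beat * self.seconds_per_beat()


@dataclass(order=True)
class CuedEvent:
    """Something cued on a beat: an animation, a sound effect, a score event."""
    beat: float
    name: str = field(compare=False)


def due_events(clock, events, prev_seconds, now_seconds):
    """Events whose beat falls after the previous update, up to and including now."""
    start = clock.beat_at(prev_seconds)
    end = clock.beat_at(now_seconds)
    return [event for event in sorted(events) if start < event.beat <= end]


clock = MusicClock(bpm=120.0)  # hypothetical tempo: half a second per beat
cues = [CuedEvent(4.0, "crowd cheer"), CuedEvent(1.0, "kick"), CuedEvent(2.0, "flash")]
for event in due_events(clock, cues, prev_seconds=0.4, now_seconds=1.0):
    print(f"beat {event.beat}: {event.name}")
```

Because animations, scoring, and sound all query the same clock, they cannot drift apart from one another, even if the update rate fluctuates.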

Even with this system in place, however, we found that having the Nintendo Switch docked and connected to a TV and hi-fi system produced so much output latency that calibration alone wasn’t enough. In these cases, it may be necessary to loosen the temporal requirement on players, so that the challenge level on TV is comparable to the tighter feedback loop we get with the Switch and a pair of analogue headphones.
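Loosening the temporal requirement might look something like the sketch below. The policy of widening the hit window by the leftover latency is an assumption chosen for illustration, as are the function names and the treatment of the window as a total width centred on the target.

```python
def widened_window_ms(base_window_ms: float, residual_latency_ms: float) -> float:
    """Hypothetical policy: widen the hit window by the latency that
    calibration could not remove."""
    return base_window_ms + max(0.0, residual_latency_ms)


def is_hit(tap_ms: float, target_ms: float, window_ms: float) -> bool:
    """A tap counts if it lands within half the window either side of the target."""
    return abs(tap_ms - target_ms) <= window_ms / 2.0


handheld_window = 115.0                                  # total width in ms
docked_window = widened_window_ms(handheld_window, 40.0)  # hypothetical residual

tap, target = 1070.0, 1000.0  # the tap lands 70 ms after the target
print("handheld:", is_hit(tap, target, handheld_window))
print("docked:  ", is_hit(tap, target, docked_window))
```

The same tap that would miss on the tight handheld window is accepted on the docked one, keeping the perceived difficulty roughly comparable between the two setups.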

THE BEST EXPERIENCE

There are times, however, when all these adjustments still can’t compensate for the parameters at hand.

A number of years ago, I worked on a mobile rhythm game, Demons’ Score, and the sad reality was the Android devices just had too much system latency at the hardware and OS levels to make the game as tight and pleasurable as it was on iOS.

As a designer in these situations, you may need to ask yourself if the loss of quality is worth the platform, or maybe even consider a genre that doesn’t require such stringent constraints, like a turn-based strategy or puzzle game.

The poster child for poor latency optimisation: PaRappa the Rapper’s PS4 re-release played nothing like the CRT-focused original.

The takeaway is to look at your core game and feedback loops and make sure you can deliver an experience of sufficient quality for the platforms you’re targeting. It’s better to know this in pre-production, before investing a lot of time and money in your baby.

So there we have it: we’ve looked at the concept of latency in games, and specifically at which layers of a game’s player feedback loop it works its way into. Some of the factors, like thread priority and hardware buffer sizes, are out of the game designer’s hands.

Others, like engine choice, core game loop setup, and design considerations, can provide a lot of leeway. If they’re approached early in development, this can help ensure a tight experience that puts the challenge directly in the player’s hands, eyes, and ears.